DeepSeek vs ChatGPT Comparison: Which AI Model Excels in Coding, Research and Logic Tasks?

This article will compare ChatGPT and DeepSeek from multiple perspectives, including coding, research, complex task handling, API pricing, and user costs.

Before Comparing: 3 Basic Ideas You Should Know

The competition in artificial intelligence is getting fiercer, with DeepSeek and ChatGPT emerging as two highly respected AI models, each showcasing unique technical features. Before diving into my personal experience and comparisons, let's first understand three fundamental concepts:

1. Application of Reinforcement Learning (R1-Zero Model)

Traditional AI models usually rely heavily on large amounts of labeled data. However, DeepSeek’s R1-Zero model is entirely based on reinforcement learning (RL), eliminating this dependency. You can think of reinforcement learning as "learning by trial-and-error," where the model continuously attempts tasks and improves based on feedback (like whether the answer was correct or not).

In my experience, this makes DeepSeek particularly strong in logical reasoning tasks (such as mathematical proofs or coding), as it autonomously explores optimal solutions rather than relying on predefined answers.

Difference from ChatGPT:

- DeepSeek (R1-Zero): Relies entirely on reinforcement learning, emphasizing autonomous exploration. The later R1 model adds a small supervised "cold-start" stage before RL.

- ChatGPT: Combines supervised fine-tuning and reinforcement learning, ensuring foundational accuracy and fluency first, then optimizing further through RL.

Simply put, ChatGPT is like a student who studies textbooks first and then practices, whereas DeepSeek is more like a self-taught expert who improves through constant trial and error.

Why this difference exists: ChatGPT, designed mainly for conversations, needs accurate and fluent responses early on, making supervised learning essential. Meanwhile, DeepSeek focuses on complex logic tasks, benefiting more from RL's autonomous exploration.
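To make "learning by trial and error" concrete, here is a minimal sketch of learning from a right/wrong reward. It is a toy epsilon-greedy bandit, not DeepSeek's actual training algorithm; the strategy names, the arithmetic task, and the function `learn_by_trial_and_error` are all hypothetical and chosen only for illustration.

```python
import random

def learn_by_trial_and_error(actions, reward_fn, steps=500, epsilon=0.1, seed=0):
    """Estimate how rewarding each action is, using only right/wrong feedback."""
    rng = random.Random(seed)
    value = {a: 0.0 for a in actions}  # running average reward per action
    count = {a: 0 for a in actions}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(actions)          # explore: try something at random
        else:
            action = max(actions, key=value.get)  # exploit: use the best known action
        reward = reward_fn(action)
        count[action] += 1
        value[action] += (reward - value[action]) / count[action]
    return value

# Hypothetical task: which strategy correctly computes 17 * 23?
strategies = {
    "guess": lambda: 400,
    "round_then_multiply": lambda: 20 * 20,
    "long_multiplication": lambda: 17 * 20 + 17 * 3,
}

def reward(name):
    # Rule-based reward: 1 if the answer is correct, 0 otherwise.
    return 1.0 if strategies[name]() == 17 * 23 else 0.0

values = learn_by_trial_and_error(list(strategies), reward)
best = max(values, key=values.get)
print(best, values)
```

No labeled examples are supplied here: the learner only finds out, after each attempt, whether the answer was right, and gradually settles on the strategy that earns reward.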

2. Multi-Head Latent Attention Mechanism (MLA)

Standard attention already splits its work across several "heads," but it has to keep the keys and values of every previous token in memory, which becomes expensive for long inputs. DeepSeek uses Multi-Head Latent Attention (MLA), which compresses those keys and values into a much smaller latent vector and reconstructs them when needed. The heads themselves still work like a small team, each free to focus on a different aspect of the input. As a loose illustration:

- Some heads may focus on keywords.

- Others may track the surrounding context.

This design reduces the memory needed during inference while maintaining high-quality output. While ChatGPT feels like an all-around player handling tasks uniformly, DeepSeek acts more like a specialized team where each member excels in their own area.
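A rough back-of-the-envelope sketch shows where the resource savings can come from. DeepSeek's technical reports describe MLA as caching one compressed latent vector per token instead of a full key and value for every head. The layer count, head count, and dimensions below are hypothetical round numbers, not DeepSeek's real configuration, and the sketch ignores the small extra positional component that MLA also caches.

```python
def kv_cache_bytes_per_token(n_layers, n_heads, head_dim, bytes_per_value=2):
    """Standard multi-head attention: one key and one value vector per head, per layer."""
    return n_layers * 2 * n_heads * head_dim * bytes_per_value

def latent_cache_bytes_per_token(n_layers, latent_dim, bytes_per_value=2):
    """Latent-style cache: one compressed vector per layer, re-projected into keys and values."""
    return n_layers * latent_dim * bytes_per_value

# Hypothetical configuration, chosen only for round numbers.
layers, heads, head_dim, latent_dim = 32, 32, 128, 512

full = kv_cache_bytes_per_token(layers, heads, head_dim)
compressed = latent_cache_bytes_per_token(layers, latent_dim)
ratio = full / compressed

print(f"full cache:   {full} bytes per token")
print(f"latent cache: {compressed} bytes per token")
print(f"reduction:    {ratio:.0f}x")
```

With these assumed numbers the cache shrinks by a factor of 16; the real saving depends on the actual model dimensions.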

3. Mixture of Experts (MoE)

MoE is about "specialized collaboration." DeepSeek contains numerous "experts" (submodules), activating only relevant ones for specific tasks:

- Math problems call the math expert.

- Coding tasks activate the programming expert.

This allows DeepSeek-V3 to have 671 billion parameters in total while activating only about 37 billion of them for each token, so its computational cost is close to that of a much smaller model. It's powerful yet efficient, like a team where each member focuses exclusively on their expertise, minimizing resource waste.
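The routing idea can be sketched in a few lines. This is a simplified top-k gate under stated assumptions: the four "experts" are plain functions standing in for sub-networks, the gate scores are made up, and real models route each token through a learned gate rather than routing whole tasks. The last line uses DeepSeek's reported V3 figures (671 billion total parameters, 37 billion active per token).

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Return (expert_index, weight) for the k highest-scoring experts, weights summing to 1."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by routing weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Hypothetical experts: simple functions standing in for feed-forward sub-networks.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]
gate_scores = [0.1, 2.0, 2.0, -1.0]  # in a real model these come from a learned gate

selected = route_top_k(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)

# Share of parameters active per token, using DeepSeek's reported V3 figures.
active_fraction = 37 / 671
print(selected, output, f"{active_fraction:.1%}")
```

Only two of the four experts run for this input; the other two cost nothing, which is the source of the efficiency described above.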

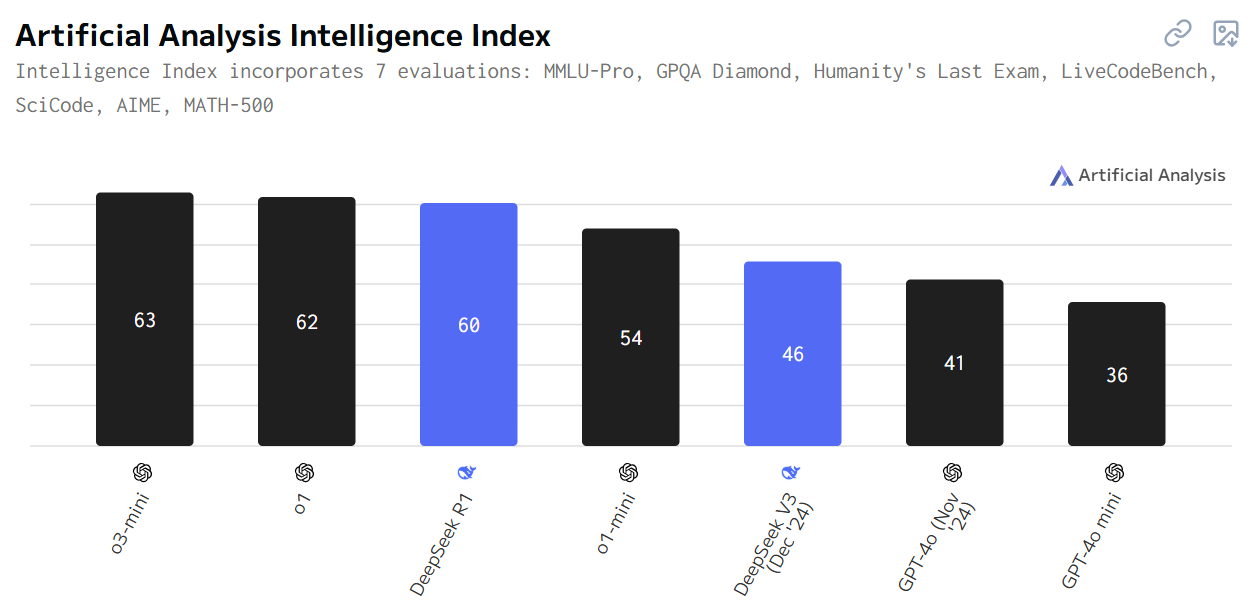

The following diagram provides a more comprehensive comparison of model capabilities.

Artificial Analysis Intelligence Index: Combination metric covering multiple dimensions of intelligence - the simplest way to compare how smart models are. Version 2 was released in Feb '25 and includes: MMLU-Pro, GPQA Diamond, Humanity's Last Exam, LiveCodeBench, SciCode, AIME, MATH-500. See Intelligence Index methodology for further details.

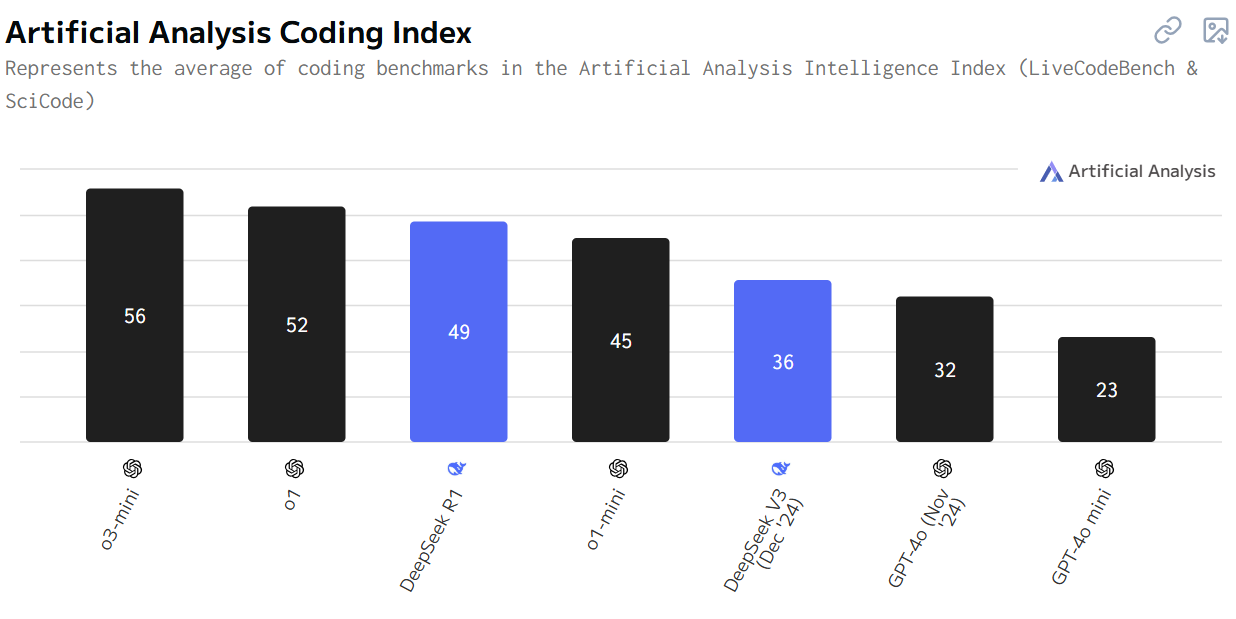

ChatGPT vs DeepSeek for Coding

Artificial Analysis Coding Index: Represents the average of coding evaluations in the Artificial Analysis Intelligence Index. Currently includes: LiveCodeBench, SciCode. See Intelligence Index methodology for further details.

In real-world user applications, DeepSeek and ChatGPT each demonstrate their own strengths and weaknesses.

Personally, when dealing with R or Python tasks, I've found DeepSeek's code concise and immediately usable, often outperforming ChatGPT's outputs.

A user on Reddit also shared his experience using DeepSeek to handle Python tasks, noting that the code it generates is "ready to use" and is more efficient compared to ChatGPT's output.

However, I also looked at other bloggers' evaluations across various scenarios—history topics, research paper summaries, complex logic problems, and translations. DeepSeek usually excels in complex reasoning, while ChatGPT tends to perform better in programming tasks requiring iterative refinements.

Opinions vary among users. One Reddit user compared DeepSeek, ChatGPT (o1), and Claude, highlighting DeepSeek's direct usability in code generation, whereas ChatGPT (o1) sometimes missed dependencies, and Claude needed more guidance but produced robust code after iterations.

Comment by u/Waterbottles_solve, from a discussion in ChatGPT

Interestingly, some users noted DeepSeek’s unique "skepticism" in advanced math problems, doubting its own answers in subtle situations—potentially helpful for complex issues.

“I have found the opposite to be true, for some advanced math questions. r1 seems to doubt itself quite a lot, which can be helpful when dealing with subtle difficulties,” one user commented on Reddit.

ChatGPT vs DeepSeek for Research

AI models sometimes "hallucinate," inventing information beyond their training data. This typically occurs when user demands exceed the model's training scope. Large Language Models (LLMs) tend to "please" users—they are more inclined to generate seemingly coherent answers rather than honestly admitting they don't know the solution. Therefore, the primary rule when using LLMs for research is: always verify the sources and locate relevant information within those sources.

In a report by Forbes, the author tested ChatGPT and DeepSeek with the following prompt: “Briefly explain how the fall of the Roman Empire influenced modern governance. Please link to sources.”

ChatGPT provided a comprehensive answer closely aligned with the core of the question, attaching direct links to sources after each paragraph. It concluded: “In summary, the fall of the Roman Empire prompted a shift from centralized imperial rule to decentralized governance structures, laying the foundation for contemporary political systems.”

DeepSeek, on the other hand, responded in a bullet-point format, offering clear logic but not fully addressing the question. For instance, it focused more on how Roman law (rather than the fall) influenced modern civil law systems and cited Edward Gibbon's The History of the Decline and Fall of the Roman Empire. This approach might be more suitable for academic research, but for users seeking quick access to directly relevant digital sources, ChatGPT proves to be more convenient.

Regardless of your choice, always verify sources yourself. AI should support your thinking, not replace it.

ChatGPT vs DeepSeek for Creative Task Handling

In creative tasks, experiences differ. Many users, myself included, find DeepSeek's conversational style more human-like: it expresses uncertainty naturally, which helps creative brainstorming.

One user shared on Twitter: “The conversational experience with DeepSeek makes me feel like I’m talking to a real person.”

“Deep Seek is miles ahead of ChatGPT. I used exactly the same prompt and I ended up talking to DeepSeek like it is an actual human being. ChatGPT gave me the same robotic responses.”

Olóyè. (@Ol0ye), April 6, 2025

In an experiment by PC Gamer, James Bentley asked ChatGPT and DeepSeek to suggest a gaming PC build under a $1,000 budget.

Initially, both AIs recommended configurations that weren't ideal, mainly because they included outdated RTX 30-series GPUs, which aren't a smart choice in 2025. Other parts, such as the Ryzen 5 processor, B550 motherboard, 16GB DDR4 RAM, and 1TB SSD, were basic but reasonable, with no special optimization.

When DeepSeek's "reasoning mode" was turned on, its suggestions became more logical and organized. It offered detailed explanations for each hardware choice and considered compatibility issues. However, this "reasoning" was mostly just basic calculations, not real internal thinking.

Interestingly, both AIs suggested PCIe 3.0 SSDs, even though PCIe 4.0 SSDs are widely available at reasonable prices. This shows the limitations of large models in understanding the hardware ecosystem—they are good at calculations based on existing data but struggle with recognizing technological trends. Additionally, since their training data often lags behind the latest advancements, this further limits their performance in fast-changing fields.

DeepSeek vs ChatGPT: Cost Efficiency and Pricing Comparison

Model API Pricing: DeepSeek's Advantage

For personal users, DeepSeek’s free open-source versions and lower cloud API costs provide excellent value, further enhanced by its energy-efficient MoE architecture.

- DeepSeek: Processing cost per million tokens is $0.48. During off-peak hours (UTC 16:30–00:30), users can enjoy a 75% discount, reducing the cost to as low as $0.12 per million tokens.

- ChatGPT: Depending on the model version, the cost ranges from $3 to $15 per million tokens.

Cost-Effectiveness Comparison:

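DeepSeek's regular API cost is roughly 1/6 to 1/30 of ChatGPT's. Against the prices quoted above, the off-peak rate widens the gap to about 125x; the 200x difference sometimes cited for extreme scenarios would require a pricier ChatGPT tier than the $15 listed here.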
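The ratios follow directly from the prices quoted in this section. The short sketch below simply redoes that arithmetic; the constant and function names are my own, and the prices are the article's figures, not live API rates.

```python
# Prices in USD per million tokens, as quoted in this section.
DEEPSEEK_STANDARD = 0.48
OFF_PEAK_DISCOUNT = 0.75
CHATGPT_LOW, CHATGPT_HIGH = 3.00, 15.00

deepseek_off_peak = DEEPSEEK_STANDARD * (1 - OFF_PEAK_DISCOUNT)

def times_cheaper(other_price, deepseek_price):
    """How many times more expensive the other price is."""
    return other_price / deepseek_price

standard_low = times_cheaper(CHATGPT_LOW, DEEPSEEK_STANDARD)
standard_high = times_cheaper(CHATGPT_HIGH, DEEPSEEK_STANDARD)
off_peak_high = times_cheaper(CHATGPT_HIGH, deepseek_off_peak)

print(f"off-peak DeepSeek price: ${deepseek_off_peak:.2f} per million tokens")
print(f"standard price gap: {standard_low:.2f}x to {standard_high:.2f}x")
print(f"largest gap (off-peak vs top tier): {off_peak_high:.0f}x")
```

At the standard rate the gap is roughly 6x to 31x, which matches the "1/6 to 1/30" figure, and the off-peak rate against the $15 tier gives about 125x.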

Training Cost Distribution:

DeepSeek V3's reported training cost is about $5.5 million, significantly lower than GPT-4's estimated $100 million investment.

Open-Source Ecosystem Support:

DeepSeek leverages open-source models to reduce enterprise customization costs, whereas ChatGPT relies on a closed-source tech stack, unable to offer similar cost optimizations.

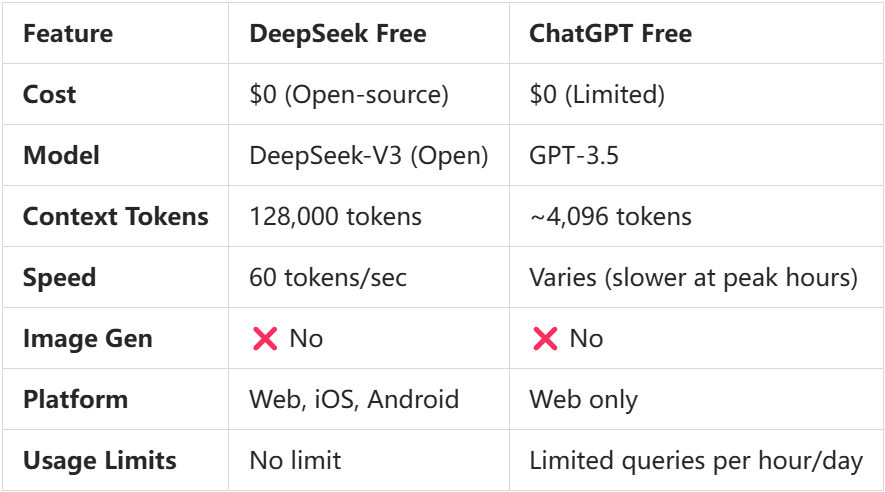

User Cost Comparison:

- ChatGPT: Personal subscription costs $20/month; enterprise API calls cost approximately $0.002 per thousand tokens, though complex tasks may consume more computational resources.

- DeepSeek: Open-source versions are free, allowing users to download and deploy locally. Cloud API pricing is about 1/3 of ChatGPT’s ($0.0006 per thousand tokens). Additionally, DeepSeek employs a Mixture of Experts (MoE) architecture, reducing inference energy consumption by 70%. For personal users and small enterprises, DeepSeek’s free open-source version meets most needs.
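Because these prices are quoted per thousand tokens while the API section uses per-million prices, a small conversion helps when comparing them. The sketch below also estimates how many API tokens a $20 budget would buy at each quoted rate. It is only a rough yardstick under the article's figures: a subscription and a metered API are different products, and the helper names are hypothetical.

```python
def per_million(price_per_1k_tokens):
    """Convert a per-thousand-token price to a per-million-token price."""
    return price_per_1k_tokens * 1000

def tokens_for_budget(budget_usd, price_per_1k_tokens):
    """Approximate number of tokens a budget buys at a per-thousand-token price."""
    return round(budget_usd / price_per_1k_tokens * 1000)

CHATGPT_PER_1K = 0.002    # USD, as quoted above
DEEPSEEK_PER_1K = 0.0006  # USD, as quoted above
BUDGET = 20               # USD, the monthly subscription price quoted above

chatgpt_tokens = tokens_for_budget(BUDGET, CHATGPT_PER_1K)
deepseek_tokens = tokens_for_budget(BUDGET, DEEPSEEK_PER_1K)
price_ratio = DEEPSEEK_PER_1K / CHATGPT_PER_1K

print(f"ChatGPT:  ${per_million(CHATGPT_PER_1K):.2f} per million tokens, {chatgpt_tokens:,} tokens for ${BUDGET}")
print(f"DeepSeek: ${per_million(DEEPSEEK_PER_1K):.2f} per million tokens, {deepseek_tokens:,} tokens for ${BUDGET}")
print(f"DeepSeek price as a share of ChatGPT's: {price_ratio:.0%}")
```

At these rates the DeepSeek price is 30% of the ChatGPT price, consistent with the "about 1/3" figure above.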

Final Thoughts: Which One Is Better? Try It Yourself!

No single AI model fits every scenario, which is why the Monica platform is my ideal choice. Monica is an open AI platform that integrates multiple models, including DeepSeek, ChatGPT, Claude, and Gemini, allowing me to explore and combine the strengths of these models:

DeepSeek Models:

- DeepSeek R1: Focused on reinforcement learning, it excels in autonomous exploration and logical reasoning, ideal for tasks requiring deep analysis, such as mathematical proofs and code generation.

- DeepSeek V3: Utilizing a Mixture of Experts (MoE) model, it performs exceptionally well in large-scale tasks while ensuring efficient resource utilization, making it particularly suitable for solving complex problems.

ChatGPT Models:

- GPT-4: One of the most mature general-purpose models, known for its stability, suitable for daily conversations, writing assistance, and knowledge Q&A.

- o1-preview: OpenAI's first reasoning-focused model, offering more thoughtful answers through simulated reasoning processes, ideal for solving complex problems.

- o1-mini: A lightweight version of o1-preview, more affordable yet still powerful, particularly for advanced reasoning in daily tasks.

- o3: Compared to the o1 series, the o3 model further enhances reasoning capabilities, specifically designed for complex tasks. It employs “simulated reasoning,” a technique mimicking human reasoning processes. By pausing and reflecting on internal thoughts, o3 analyzes problems more deeply, surpassing traditional “Chain of Thought” (CoT) prompting, offering advanced autonomous analysis and reflection capabilities.

Hybrid Intelligence: The Future of AI

Whether it’s the o1 series, o3, ChatGPT, or DeepSeek, each model provides solutions for different needs. The future winner may not be a single leading technology but rather those who master the art of leveraging various models’ strengths in “hybrid intelligence” practices. As open-source technologies continue to evolve, this competition may redefine the power dynamics of the AI industry.

In this fast-changing era, no single model can meet everyone’s needs, but by selecting the right tools, AI can truly become a driving force for innovation.
