Wan 2.1 vs Sora: Reshaping AI Video Generation

In this article, I compare Alibaba's Wan 2.1 and OpenAI's Sora in terms of technology, features, performance, and applications.

🌟 1. Introduction: The Future of AI Video Generation is Here!

Remember the first time we saw AI-generated images? It was mind-blowing! At that moment, we knew video generation was the next big frontier. And now, that future has arrived—faster and more impressive than we ever imagined.

Two AI giants are leading the way in video generation:

🎬 Wan 2.1, Alibaba’s open-source rising star, and

🎬 Sora, OpenAI’s closed-source powerhouse.

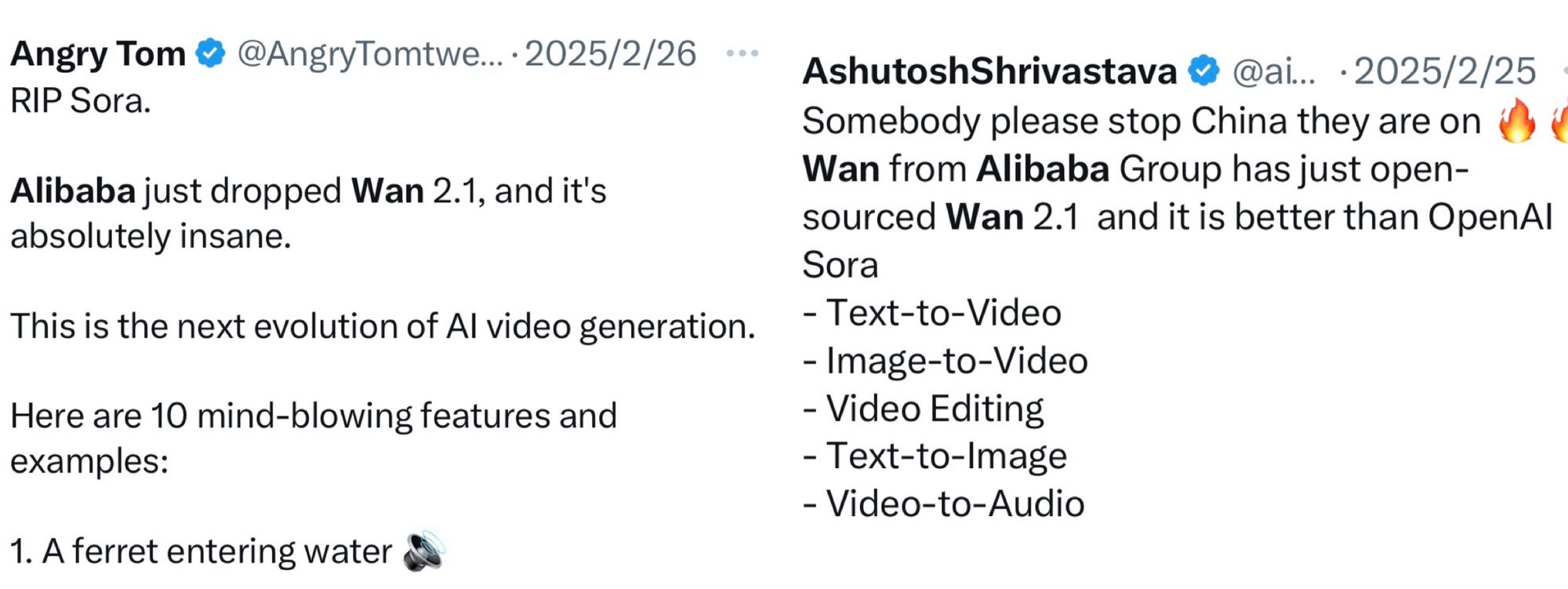

Since its release on the 25th of last month, Wan 2.1 has taken the internet by storm. Its ultra-realistic videos have amazed users, with many calling it the new king of AI-generated videos. Some are even saying that Sora is already outdated in comparison! If you're interested in Wan 2.1 but don't want to go through the complicated deployment and installation, Monica offers a trial feature for Wan 2.1. Click here to experience the magic of Wan 2.1 instantly!

In this blog, we’ll dive deep into Wan 2.1 vs. Sora, comparing their technology, capabilities, and future potential. Is Wan 2.1 truly the next revolution in AI video generation? Is Sora falling behind, or does it still have an edge?

Buckle up—we’re about to embark on an exciting journey into the cutting-edge world of AI video creation! 🚀

🎥 2. Overview of AI Video Generation

Before we compare Wan 2.1 and Sora, let’s first break down how AI video generation actually works.

✍️ 2.1 Text-to-Video: Bringing Words to Life

At its core, Text-to-Video (T2V) technology transforms text descriptions into visual motion sequences. Think of it as a three-step process:

1️⃣ Understanding the Text:

The AI first analyzes the words to recognize objects, actions, settings, and emotions. This requires advanced language processing skills.

2️⃣ Creating Visuals:

The AI translates the text into a scene with characters, backgrounds, lighting, and motion. The hardest part? Making objects move realistically while obeying the laws of physics.

3️⃣ Generating Motion Over Time:

The AI must ensure that the video flows smoothly, with consistent characters and movements that make sense over time.

Sounds simple? Not at all! Imagine typing:

📝 “A cat jumps off a couch.”

The AI must understand:

🐱 What a cat looks like

🛋️ What a couch looks like

🔄 How a cat jumps (physics, gravity, and muscle movement)

🎞️ How the cat’s fur reacts while jumping

That’s a LOT of knowledge packed into a few words!

🖼️ 2.2 Image-to-Video: Bringing Pictures to Life

Unlike T2V, Image-to-Video (I2V) starts with a static image and adds motion. It’s like turning a photograph into a living scene—a person starts talking, a tree’s leaves begin to sway, or a car starts driving.

The biggest challenge? Filling in the missing details. If an image only shows a person’s front, the AI must guess what their back looks like when they turn. This requires deep knowledge of human anatomy, fabric movement, and lighting effects.

⚠️ 2.3 Challenges in AI Video Generation

Even with today’s breakthroughs, AI-generated videos still face major challenges:

🕰️ Time Consistency: Keeping characters and objects stable across frames to avoid weird glitches.

🌍 Physics Realism: Ensuring actions follow the laws of physics (e.g., objects don’t float unless they should!).

🔍 Detail Preservation: Keeping fine details sharp, especially for textures, facial expressions, and small objects.

📖 Story Coherence: Ensuring the video tells a logical and engaging story, not just random visuals.

💻 Computational Power: AI video generation is resource-intensive. Making it efficient and accessible is a huge challenge.

🌟 2.4 Why Are Wan 2.1 and Sora So Hyped?

Among many AI video models, Wan 2.1 and Sora stand out because:

🔹 Different Philosophies: Wan 2.1 is open-source, promoting collaboration & accessibility, while Sora is closed-source, focusing on proprietary innovation.

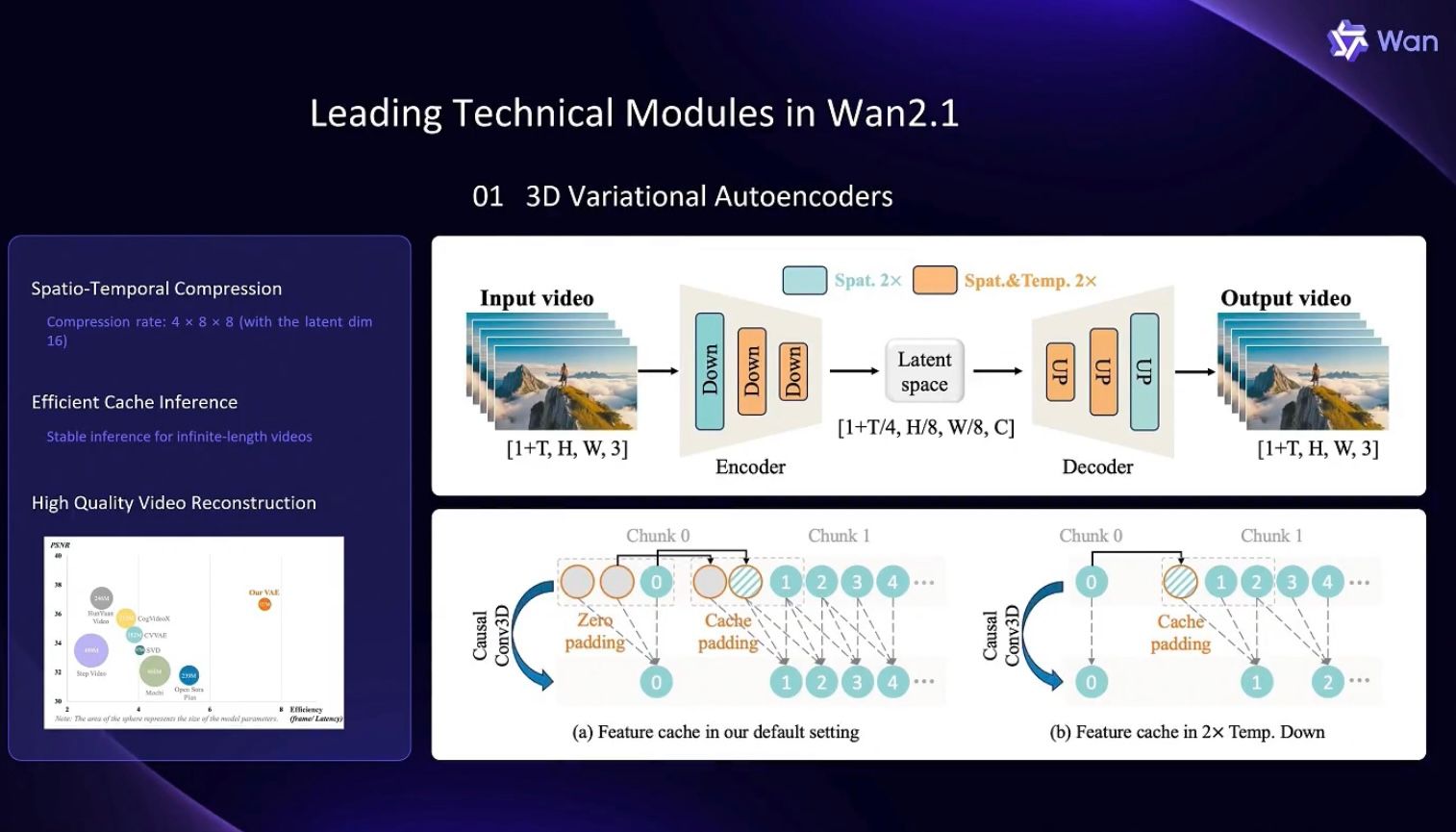

🔹 Breakthrough Technologies: Wan 2.1 uses Spatio-Temporal Variational Autoencoders, while Sora employs spatiotemporal patching—both pushing AI video quality forward.

🔹 Backed by Tech Giants: Alibaba and OpenAI are heavily investing in these models, making them more than just research projects—they’re shaping the future of content creation.

As we explore these models in detail, you’ll see their unique strengths—like comparing a martial arts movie to a sci-fi blockbuster. They both dominate AI video generation, but in different ways.

🎬 3. Wan 2.1: The Open-Source Video Revolution

In the AI video arena, Wan 2.1 is like a martial arts master—skilled, open-minded, and willing to share its secrets with the world. Unlike its competitors, it embraces open-source principles, allowing developers and creators worldwide to experiment and improve upon it.

🧙‍♂️ 3.1 The Magic Behind Wan 2.1

Wan 2.1’s core technology includes:

🔹 Spatio-Temporal Variational Autoencoders (ST-VAE): Compress videos into a compact latent format, which is what makes high-quality, long-duration video practical. Wan 2.1 pushes this further by shrinking video data by a factor of 256, so longer and higher-resolution videos cost far less memory and compute to generate.

🔹 Diffusion Transformer (DiT): A powerful AI brain that understands prompts and generates coherent, detailed videos.

🔹 Multilingual Support (UMT5): Handles both English & Chinese seamlessly, making AI video creation more accessible worldwide.

🔹 Flow Matching Sampling: Ensures fast and stable training, so the model generates high-quality videos quickly.
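To make the 256x figure concrete, here is a minimal sketch of the arithmetic. It assumes the factor splits into 4x along time and 8x along each spatial axis (4 × 8 × 8 = 256). That split, the function names, and the example clip size are illustrative assumptions, not details confirmed in this article.

```python
def latent_grid(frames: int, height: int, width: int,
                t_factor: int = 4, s_factor: int = 8) -> tuple:
    """Return the (time, height, width) grid after compression.

    Assumption: 4x temporal and 8x8 spatial downsampling, which multiplies
    out to the 256x figure quoted above. Ceiling division is used so a
    partial block still counts as one latent cell.
    """
    def ceil_div(a: int, b: int) -> int:
        return -(-a // b)

    return (ceil_div(frames, t_factor),
            ceil_div(height, s_factor),
            ceil_div(width, s_factor))


def compression_ratio(frames: int, height: int, width: int) -> float:
    """Ratio of pixel positions to latent positions for one clip."""
    t, h, w = latent_grid(frames, height, width)
    return (frames * height * width) / (t * h * w)


# Hypothetical clip: 80 frames at 832x480.
grid = latent_grid(80, 480, 832)
ratio = compression_ratio(80, 480, 832)
print(grid, ratio)
```

When every dimension divides evenly, the ratio lands exactly on 256. Odd sizes give slightly less because of the rounding up.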

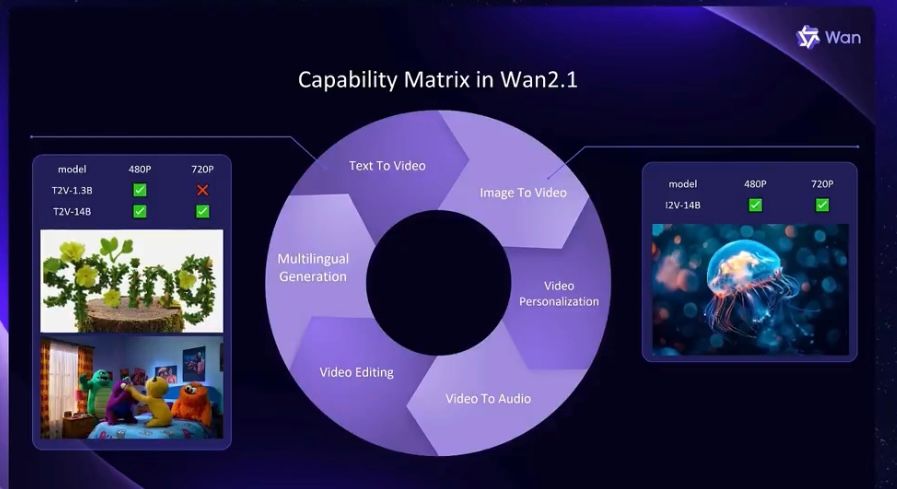

🏆 3.2 The Three Warriors: Wan 2.1’s Model Variants

Wan 2.1 comes in three variations:

1️⃣ Wan 2.1-I2V-14B: Focuses on image-to-video transformation, supports 480p & 720p, and creates complex animated scenes.

2️⃣ Wan 2.1-T2V-14B: A powerful text-to-video model that accepts both English and Chinese prompts.

3️⃣ Wan 2.1-T2V-1.3B: A lightweight model optimized for consumer-grade GPUs, generating 480p videos with just 8.19GB VRAM.

With a massive dataset of 1.5 billion videos and 10 billion images, Wan 2.1 is trained on an unprecedented scale, making it one of the most powerful AI video generators to date.

🎬 3.3 How Wan 2.1 Became a Video Genius

Like a great filmmaker learning from thousands of movies, Wan 2.1 was trained on a massive dataset—1.5 billion videos and 10 billion images! This gave it an incredible ability to understand a wide range of scenes, actions, and artistic styles.

But it didn’t learn everything all at once. It went through a six-stage training process, just like a student progressing from elementary school to college. The main steps looked like this:

📌 First, it learned from simple 256p images.

📌 Then, it started mixing in low-resolution videos to get used to motion.

📌 As training progressed, it handled longer and more complex videos.

📌 Finally, it reached the advanced level, generating high-quality 480p and 720p videos.

📌 The last step was fine-tuning, using only the best, carefully selected data for top-tier quality.

🛡️ Keeping it Smart: Data Filtering for Top-Notch Quality

Not all data is useful for training—bad-quality images or misleading text descriptions can confuse an AI model. To avoid this, Wan 2.1’s team carefully filtered the data in four key ways:

✅ Removing low-quality or inappropriate content.

✅ Balancing different categories of images and videos.

✅ Scoring motion quality to ensure smooth, realistic animations.

✅ Using smart text-mining techniques to make sure text descriptions match the images.
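The four filters above can be sketched as a single pass over the data. This is only an illustration of the idea: the `Sample` fields, the 0-to-1 scores, the thresholds, and the per-category cap are all hypothetical, and the Wan 2.1 team's actual pipeline is not described in enough detail here to reproduce.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    caption: str
    category: str
    quality: float         # hypothetical 0-1 visual quality score
    motion: float          # hypothetical 0-1 motion smoothness score
    caption_match: float   # hypothetical 0-1 text/visual agreement score
    flagged: bool = False  # True if marked inappropriate


def filter_samples(samples, min_quality=0.5, min_motion=0.5,
                   min_match=0.5, per_category_cap=2):
    """Keep samples that pass all four checks, in input order."""
    kept, counts = [], {}
    for s in samples:
        if s.flagged or s.quality < min_quality:
            continue  # 1. drop low-quality or inappropriate content
        if s.motion < min_motion:
            continue  # 3. drop clips with poor motion quality
        if s.caption_match < min_match:
            continue  # 4. drop captions that do not match the visuals
        if counts.get(s.category, 0) >= per_category_cap:
            continue  # 2. cap each category so none dominates
        counts[s.category] = counts.get(s.category, 0) + 1
        kept.append(s)
    return kept


samples = [
    Sample("cat jumps off couch", "animals", 0.9, 0.8, 0.9),
    Sample("blurry dog", "animals", 0.2, 0.8, 0.9),
    Sample("jittery skyline", "cities", 0.9, 0.1, 0.9),
    Sample("mislabeled street", "cities", 0.9, 0.9, 0.1),
    Sample("bird in flight", "animals", 0.8, 0.9, 0.8),
    Sample("horse running", "animals", 0.9, 0.9, 0.9),
]
kept = filter_samples(samples)
print([s.caption for s in kept])
```

The cap is a deliberately crude stand-in for category balancing; a real pipeline would more likely resample or reweight categories than discard data outright.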

Thanks to this rigorous process, Wan 2.1 learned not just to generate videos—but to make them look natural, smooth, and visually appealing! 🎥✨

3.4 How to Use Wan 2.1: A Beginner-Friendly Guide

So, you’ve heard about Wan 2.1, the AI video wizard 🧙‍♂️✨, and now you’re wondering: How do I use it to create my own magical videos? Don’t worry, it’s easier than you think! Whether you’re a developer, a creator, or just someone who wants to experiment with AI, here’s a step-by-step guide to getting started.

🛠️ Step 1: Pick Your Setup – Cloud or Local?

Wan 2.1 gives you two ways to use it—depending on whether you want full control or a hassle-free experience:

✅ Cloud Deployment (Easy Mode) ☁️

- If you don’t have a powerful computer, you can run Wan 2.1 on cloud services like Alibaba Cloud.

- No need to install anything—just access it through APIs and start generating videos!

- Best for: Non-technical users & creators who just want quick results.

✅ Local Deployment (Advanced Mode) 💻

- Want full control? You can install Wan 2.1 on your own computer if you have a strong enough GPU.

- Requires Python and a compatible GPU with enough VRAM (at least 8.19GB for the lightweight model).

- Best for: Developers, AI enthusiasts, and those who love to tweak settings.

📥 Step 2: Install Wan 2.1 Locally (For Tech-Savvy Users)

If you choose to run Wan 2.1 on your computer, follow these steps:

1️⃣ Set Up Your Environment

- Install Python (if you don’t have it already).

- Install PyTorch (a deep-learning framework).

2️⃣ Download the Model

- Clone the Wan 2.1 repository from GitHub.

- Download the required pre-trained models (these contain all the AI’s knowledge).

3️⃣ Run a Test

- Use simple commands to generate a short video from text or an image.

- Adjust settings like frame rate, duration, and resolution.
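For the "Run a Test" step, a small helper that assembles the command line can save some typing. The sketch below only builds and prints the command; it does not run anything. The script name `generate.py` and the flags `--task`, `--size`, `--ckpt_dir`, `--prompt`, and `--image` are written as I recall them from the project's README, so treat them as assumptions and confirm against the repository (or `python generate.py --help`) before relying on them. The checkpoint folder name is also just a placeholder.

```python
import shlex


def build_generate_command(prompt, task="t2v-1.3B", size="832*480",
                           ckpt_dir="./Wan2.1-T2V-1.3B", image=None):
    """Assemble an argument list for a local test run.

    All flag names are assumptions based on the public README; verify them
    against the version of the repository you cloned.
    """
    cmd = ["python", "generate.py",
           "--task", task,
           "--size", size,
           "--ckpt_dir", ckpt_dir,
           "--prompt", prompt]
    if image is not None:
        # Image-to-video runs additionally take a starting image.
        cmd += ["--image", image]
    return cmd


cmd = build_generate_command("A cat jumps off a couch.")
# shlex.join quotes arguments so the line can be pasted into a shell.
print(shlex.join(cmd))
```

Keeping the command as a list rather than one long string also makes it safe to hand to `subprocess.run(cmd)` later, since prompts with spaces or quotes need no manual escaping.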

✍️ Step 3: Choose Your Input – Text or Image?

Wan 2.1 is super flexible when it comes to input. You can generate videos using:

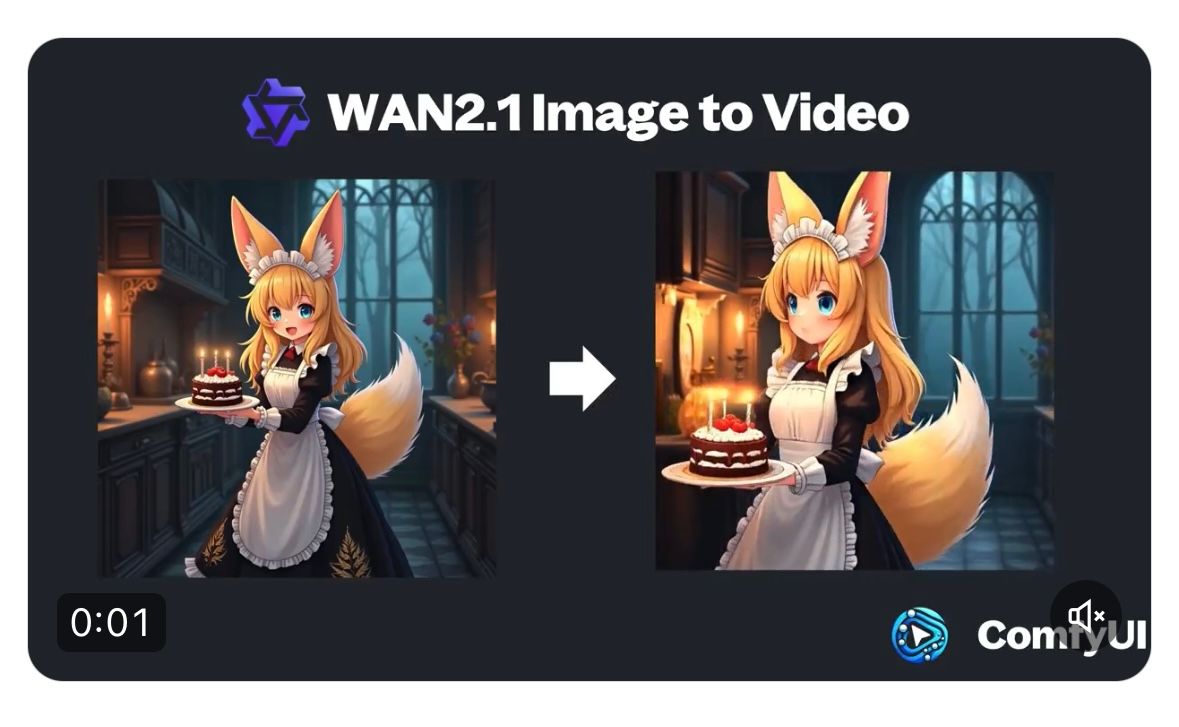

🎨 Image-to-Video (I2V) – Upload a picture, and Wan 2.1 will bring it to life!

✍️ Text-to-Video (T2V) – Just type a description, and it will generate a video based on your words.

👉 Example 1: Text to Video

Input: “A lovely girl sits on a tree trunk, cradling a warm cup of tea as snow gently falls around her.”

Result: A beautiful animated scene in which the girl's hair blows in the wind and steam rises from the teacup.

👉 Example 2: Image to Video

Input: A little girl dressed as a fox maid carries a cake.

Result: The little girl is walking towards you with a cake in her hand!

🎨 Step 4: Customize Your Video

Now comes the fun part—tweaking your video settings to get the best results!

🔹 Choose a model variant (I2V-14B, T2V-14B, or the lightweight T2V-1.3B).

🔹 Adjust video length – Want a short clip or a longer sequence?

🔹 Select resolution – 480p or 720p (higher resolution takes more power).

🔹 Fine-tune motion – Control how smooth and realistic the animation looks.
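If you script your runs, it helps to keep these choices in one validated object. The following is a minimal sketch: the class name, field names, and default values (5 seconds at 16 fps) are hypothetical, and only the variant names and the 480p/720p options come from this guide.

```python
from dataclasses import dataclass

VARIANTS = {"I2V-14B", "T2V-14B", "T2V-1.3B"}
RESOLUTIONS = {"480p", "720p"}


@dataclass(frozen=True)
class VideoSettings:
    variant: str = "T2V-1.3B"
    resolution: str = "480p"
    seconds: float = 5.0  # hypothetical default clip length
    fps: int = 16         # hypothetical default frame rate

    def __post_init__(self):
        # Fail early on typos instead of partway through a long render.
        if self.variant not in VARIANTS:
            raise ValueError(f"unknown variant: {self.variant}")
        if self.resolution not in RESOLUTIONS:
            raise ValueError(f"unsupported resolution: {self.resolution}")
        if self.seconds <= 0 or self.fps <= 0:
            raise ValueError("seconds and fps must be positive")

    @property
    def frame_count(self) -> int:
        """Total frames the model has to generate for this clip."""
        return round(self.seconds * self.fps)


settings = VideoSettings()
print(settings, settings.frame_count)
```

The frame count is the number worth watching: doubling either the duration or the frame rate doubles the work, and moving from 480p to 720p increases the cost of every one of those frames.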

🚀 Step 5: Generate & Save Your Masterpiece

Once you’re happy with your settings, just hit generate, sit back, and watch the magic happen! ✨

✅ Preview the video.

✅ Make adjustments if needed.

✅ Download & share your AI-powered creation!

🎬 4. OpenAI’s Sora: Redefining Video Creation

Launched in December 2024 for ChatGPT Plus and Pro subscribers, Sora is already making waves in the world of AI-generated videos. From filmmakers to digital marketers, educators, and creative hobbyists, everyone is talking about it. And with its recent expansion into Europe, the buzz has only intensified.

As a text-to-video model, Sora can generate highly detailed, dynamic videos up to 20 seconds long—featuring complex camera movements, multiple characters, and lifelike emotions. Whether you're looking to prototype a film scene, craft viral social media clips, or create immersive educational content, Sora provides an accessible, cost-effective solution.

🔮 4.1 The Magic Behind Sora’s Technology

Sora’s power lies in a revolutionary blend of diffusion models and transformer architectures. Unlike traditional frame-by-frame video generation, Sora treats a video as a four-dimensional puzzle, considering both space and time simultaneously.

Imagine this:

🎨 Traditional AI video models are like painters, crafting each frame one by one and hoping they blend smoothly.

🗿 Sora, however, is like a sculptor, shaping an entire 4D structure from the start, ensuring a seamless fusion of movement, physics, and visual consistency.

This approach allows Sora to "predict" future frames, maintaining coherence across complex motions—even when objects move, disappear, or interact dynamically with their environment.
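One way to picture the "4D puzzle" is to count the puzzle pieces. The sketch below cuts a video grid into small space-time blocks and counts them. The patch sizes are purely illustrative; as far as I know, OpenAI has not published the values Sora actually uses, and the function name is my own.

```python
def spacetime_patch_count(frames: int, height: int, width: int,
                          patch_t: int = 4, patch_h: int = 16,
                          patch_w: int = 16) -> int:
    """Count the space-time blocks a video grid splits into.

    Each block spans patch_t frames and a patch_h x patch_w region, so it
    carries information about both appearance and motion. The default patch
    sizes are illustrative assumptions, not Sora's real configuration.
    """
    if frames % patch_t or height % patch_h or width % patch_w:
        raise ValueError("dimensions must be divisible by the patch size")
    return (frames // patch_t) * (height // patch_h) * (width // patch_w)


# Hypothetical small grid: 16 frames of 64x64.
print(spacetime_patch_count(16, 64, 64))
```

Because every block covers several frames at once, the model reasons about short stretches of motion rather than isolated stills, which is the intuition behind the sculptor analogy.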

But here’s where it gets even more impressive:

Sora doesn’t just generate visuals—it understands the physics of the world. It has been trained on millions of videos, learning how:

💦 Water splashes realistically

☀️ Light creates shadows

🚶 Humans walk, run, and interact naturally

In effect, Sora behaves as though it has an internal "physics engine", which lets it create incredibly lifelike videos, though, of course, occasional quirky physics errors still pop up!

🎨 4.2 The Creative Toolbox: Sora’s Features & Interface

Sora’s intuitive interface makes AI video generation accessible to everyone—even users with zero experience in video editing. At its core, you simply type a text prompt, and Sora brings it to life.

But the real magic? Sora’s advanced creative tools:

✨ Remix – Modify existing videos using new prompts while keeping the core scene intact. Change the lighting, outfits, or weather with a single command!

✂️ Recut – Extract the best parts of an AI-generated video to create new clips. It’s like having an AI film editor on demand.

🔄 Loop – Create seamless looping videos, ideal for background animations or immersive experiences.

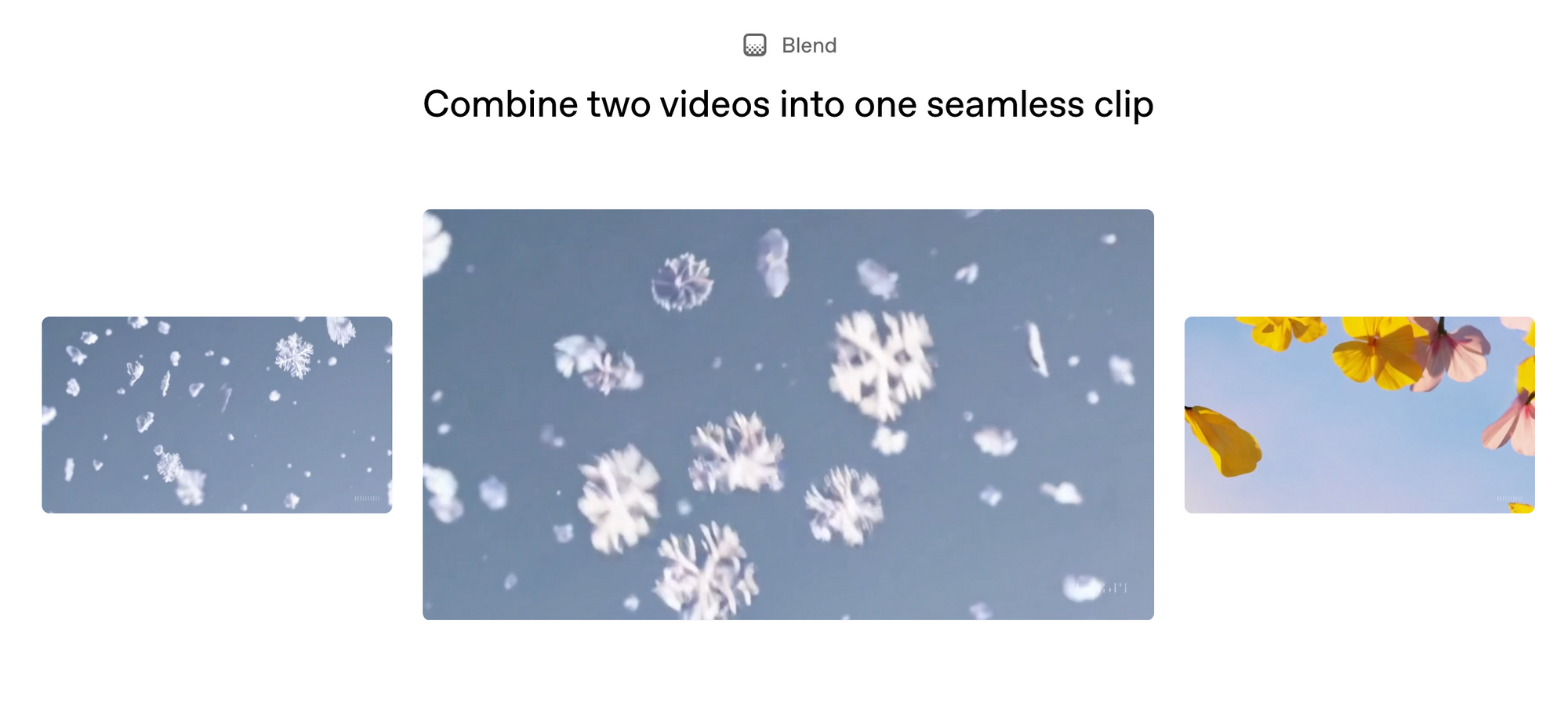

🎭 Blend – Merge two different videos to create hybrid content with mixed styles and elements.

📽️ Storyboard – Perhaps Sora’s most powerful tool. Users can combine multiple AI-generated clips into a cohesive film, ensuring consistent characters, scenes, and storytelling across different shots.

And here’s something cool—Sora embraces a community-driven approach (similar to Midjourney). Users can explore public AI-generated videos, view the exact prompts used, and remix them for their own creations!

💰 4.3 Subscription Model: Plus vs. Pro

Sora offers two subscription tiers, catering to both casual creators and professional filmmakers.

🥉 ChatGPT Plus (~$20/month)

✔️ Up to 1,000 video credits per month (~50 priority videos)

✔️ Maximum 720p resolution

✔️ Visible watermark on videos

✔️ Basic editing tools (Text Input, Remix)

🏆 ChatGPT Pro (~$200/month)

🔥 Up to 10,000 video credits per month (~500 priority videos)

🔥 Higher resolution (1080p) & longer videos (up to 20 seconds)

🔥 Option to download watermark-free videos

🔥 Access to advanced tools (Recut, Storyboard, Loop, Blend)

This tiered model ensures that hobbyists, indie creators, and professionals all have a pricing plan that fits their needs. Casual users can experiment affordably, while professionals can produce high-quality, AI-generated content at scale.
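A quick calculation shows what the two tiers work out to per video. The figures below are the approximate ones listed above (the "~" values), so the results are rough estimates, and the dictionary layout and function names are just for illustration.

```python
PLANS = {
    "Plus": {"price_usd": 20, "credits": 1_000, "priority_videos": 50},
    "Pro": {"price_usd": 200, "credits": 10_000, "priority_videos": 500},
}


def credits_per_video(plan: str) -> float:
    """Average credits one priority video consumes on this plan."""
    p = PLANS[plan]
    return p["credits"] / p["priority_videos"]


def cost_per_video(plan: str) -> float:
    """Approximate dollars per priority video at full monthly usage."""
    p = PLANS[plan]
    return p["price_usd"] / p["priority_videos"]


for name in PLANS:
    print(name, credits_per_video(name), round(cost_per_video(name), 2))
```

On these numbers both tiers come out to about 20 credits and roughly $0.40 per priority video, so what Pro really buys is volume, resolution, watermark-free downloads, and the extra tools rather than a lower unit price.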

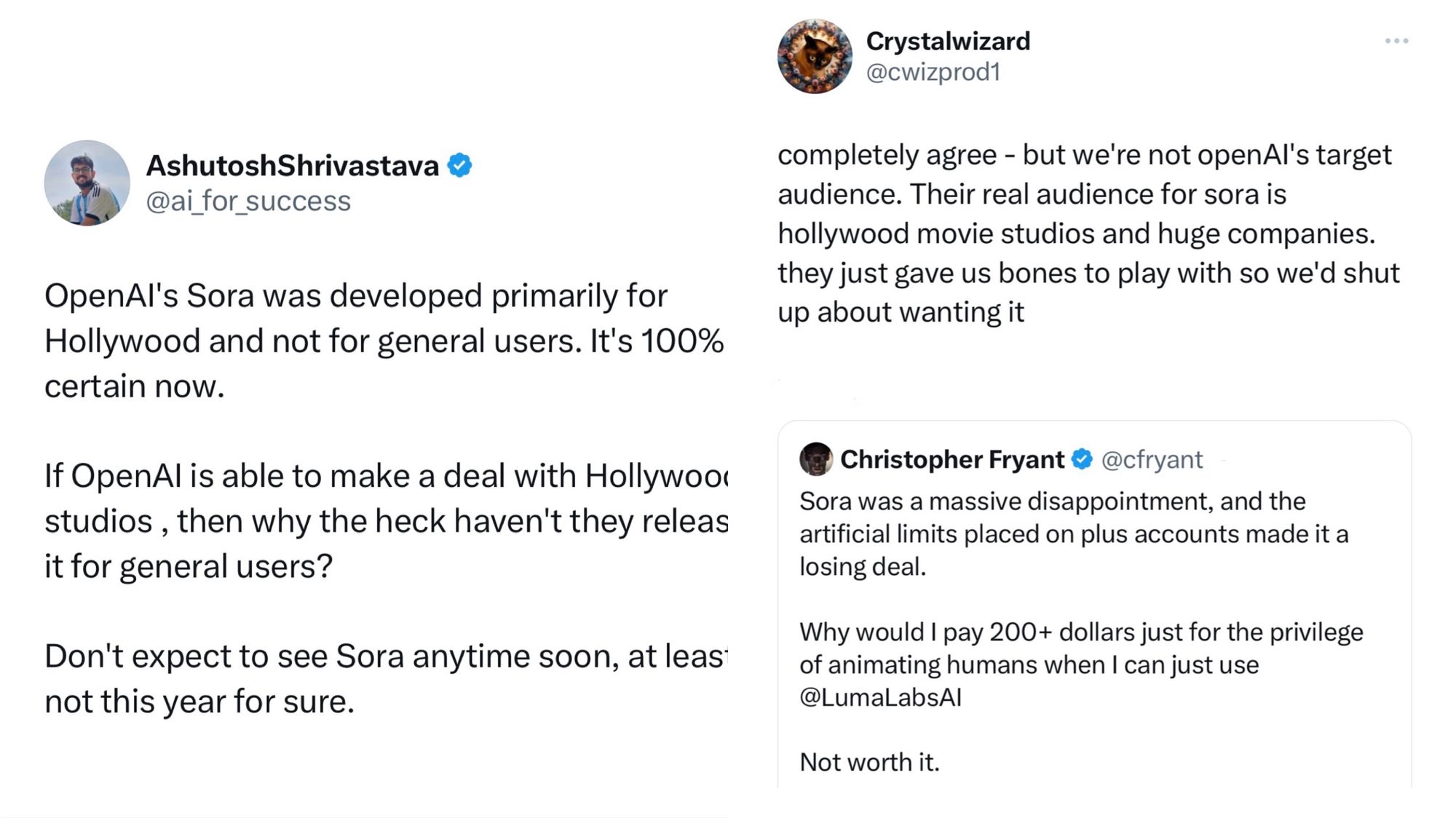

🎭 4.4 User Reactions: A Game-Changer for Video Creators

Since its release, OpenAI has collaborated with visual artists, designers, and filmmakers to explore how Sora can enhance creativity. Many early adopters are already pushing the boundaries of AI-assisted filmmaking.

One standout example? 🎈 Shy Kids, a Toronto-based multimedia production company. For their short film “Air Head”, they used Sora to generate a balloon-headed character exploring different worlds. With Sora in their creative arsenal, Shy Kids went full throttle on imagination while making Air Head. But generating their balloon-headed protagonist wasn’t as simple as just typing a prompt and hitting "go." They experimented over and over again—tweaking color tones, fine-tuning movements, and layering in the perfect soundtrack.

🎥 "We now have the ability to expand on stories we once thought impossible," says Walter Woodman, director of Air Head.

But here’s the exciting part—Sora isn’t just about realism. It’s a tool for surrealism, abstract expressionism, and artistic experimentation.

🖌️ “As impressive as Sora is at making things appear real, what excites us even more is its ability to create things that are totally surreal. We’re entering a new era of AI-powered storytelling.”

Sora isn’t just a budget-friendly filmmaking assistant; it’s a game-changer for small production teams, opening up a whole new creative playground for the film industry. As filmmakers embrace AI, they’re also rethinking their roles—blending human vision with machine intelligence in ways never seen before. In a world where big data meets personal storytelling, AI-assisted filmmaking isn’t just a tool—it’s the new frontier of cinema. 🎬✨

5. Head-to-Head Showdown: Wan 2.1 vs. Sora

Now that we’ve explored Wan 2.1 and Sora individually, it’s time for the ultimate AI video generation showdown! These two powerhouses have been making waves in the industry, but how do they stack up when given the same prompt?

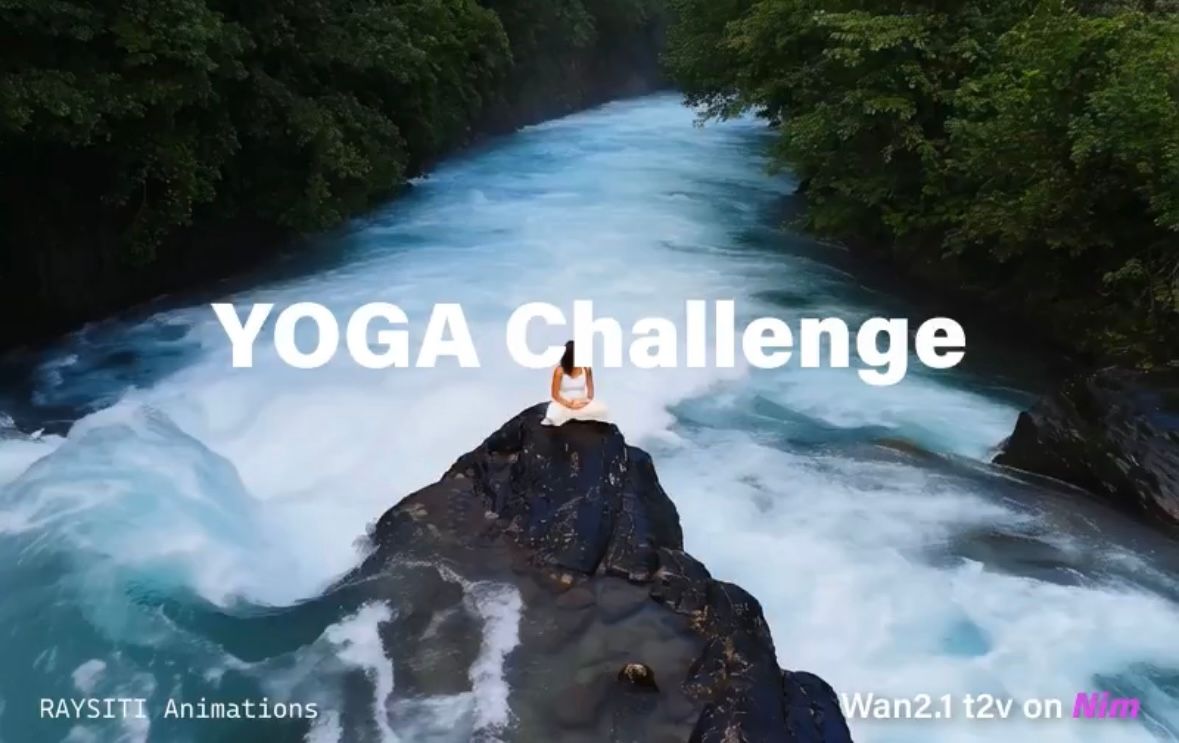

Before diving into the detailed comparison, check out this video to see how both models interpret the same scene. Sometimes, seeing is believing! 👀🎥

Battle of the AI Directors: A Scene-by-Scene Breakdown

For this test, we used the following prompt:

An aerial drone camera orbits a beautiful lady in a flowing white dress, sitting on a jagged rock amid a violent river, performing yoga. She rises from the sitting position, stretches both arms and her left leg, balancing on her right leg.

Both models nailed the scene composition—the raging river, jagged rocks, and splashing water all look incredibly realistic, as if filmed on location. But the differences start to show when we analyze motion and realism:

Motion Accuracy 🏃‍♀️

- Wan 2.1 follows the prompt to the letter—our yogi begins in a sitting position before gracefully stretching her arms and extending her left leg.

- Sora, on the other hand, interprets the prompt differently. Instead of starting from a seated pose, the model generates a smooth transition from a standing yoga stance. While fluid, the action appears too perfect—almost robotic.

Human-like Imperfections 🤔

- One of Wan 2.1’s standout qualities is its subtle imperfections. When the woman raises her leg, there’s a tiny, natural wobble—something a real person might do while balancing. This adds a touch of realism.

- Sora’s motion, while elegant, seems too controlled, as if the character is weightless. The transitions are seamless, but perhaps too flawless to feel truly human.

Cinematography & Color Grading 🎬

- Wan 2.1 delivers a cinematic result with a stunning deep blue river, lush green foliage, and a grand central rock—a scene straight out of a movie.

- Sora’s version is visually polished but lacks the moody, dramatic depth that makes Wan 2.1’s version stand out.

So, which one do you prefer? If you’re curious, try running the same prompt through both Wan 2.1 and Sora and see which style suits your creative vision!

Comparing the Two: A Feature-by-Feature Breakdown

| Feature | Alibaba Wan 2.1 (Open Source) | OpenAI Sora (Closed Source) |
| --- | --- | --- |
| Source | ✅ Open source | ❌ Closed source |
| Capabilities | ✅ Text-to-video, image-to-video, video editing, text-to-image, video-to-audio | ✅ High-quality text-to-video synthesis, creative outputs |
| Video resolution | ✅ 480p and 720p | ✅ Up to 1080p |
| Language support | ✅ Supports multilingual content | ⚠️ Primarily English |
| Performance on VBench | ✅ Consistently outperforms on benchmarks (e.g., motion smoothness, temporal consistency) | ✅ Strong performance, but lower than Wan 2.1 |
| Hardware requirements | Optimized for various GPUs; consumer-friendly variant available (e.g., T2V-1.3B requires ~8.19GB VRAM) | ✅ Runs in the cloud; no local hardware required |
| Local deployment | ✅ Supported | ❌ Not supported |
| Customization & flexibility | Highly customizable and community-driven | Limited customization options |

As the table shows, Alibaba Wan 2.1 and OpenAI Sora are positioned very differently. If they were AI film directors, they would have completely different styles! Wan 2.1 is like an indie filmmaker: open-source, adaptable, and designed for efficiency. It runs on a wide range of hardware, including consumer-grade GPUs, making it a favorite among developers and tech enthusiasts. Its 3D VAE + DiT architecture keeps memory usage down while preserving smooth motion and consistent object tracking. In some areas, like physics simulation and motion fluidity, it even outperforms Sora. But what really sets Wan 2.1 apart is its versatility. It’s not just a text-to-video tool; it also supports image-to-video, video editing, text-to-image, and even video-to-audio conversion, with both English and Chinese input support.

On the other hand, Sora is like a Hollywood-level filmmaker, focusing on high-definition visuals and seamless storytelling. Unlike traditional models that generate videos frame by frame, Sora treats the entire video as a whole, ensuring strong narrative coherence. Its 1080p video quality is stunning, and its creative toolkit—including features like Remix, Recut, Loop, Blend, and Storyboard—makes it a powerful tool for professional artists. However, Sora has its drawbacks: it only supports cloud-based generation, lacks strong multilingual capabilities, and requires a paid subscription—$20 per month for Plus and $200 for Pro. For casual creators, the high cost might be a dealbreaker, and some users feel that Sora is geared more toward film studios than individual artists.

In the end, Wan 2.1 is the go-to choice for those who love flexibility and efficiency, while Sora is perfect for those seeking top-tier video quality and advanced creative tools. The decision ultimately depends on whether you’re a tech-savvy tinkerer or a digital artist looking to push the boundaries of AI filmmaking.

6. Real-World Applications: Putting AI Video Models to the Test

While theoretical comparisons are useful, the real value of AI video generation models lies in how they perform in practical applications. Just like a sports car, no matter how impressive the specs are, it ultimately needs to prove itself on the road. So, let’s take a look at how Wan 2.1 and Sora fare in different real-world scenarios.

6.1 Social Media Content Creation: Eye-Catching Short Videos

Short videos have taken over platforms like TikTok, Instagram, and Weibo, making AI-generated content a secret weapon for creators looking to grab attention.

- Wan 2.1: Its multilingual support makes it especially appealing for global social media creators. Chinese-speaking users can input prompts directly in their native language without worrying about translation accuracy. The smooth motion and realistic physics simulation also make it ideal for content that requires authenticity, such as product showcases and environment simulations.

- Sora: Where Sora shines is in its artistic creativity. Its videos often have a unique visual flair, making it great for creative marketing and artistic expression. Imagine how well a wild visual illusion, realized in a ten-second clip, could perform on social media. See how Sora impresses everyone with its stunning creativity on TikTok here.

Best Choice: If you need realistic visuals and multilingual support, Wan 2.1 might be the better option. But if you’re focused on artistic expression and creative storytelling, Sora has the edge. Many professional creators may even choose to use both tools, depending on their specific needs.

6.2 Film and Video Production: Previsualization and Prototyping

In professional filmmaking, pre-production planning is crucial. Traditionally, storyboarding and previsualization require a lot of time and effort from artists and animators, but AI-generated videos are transforming this process.

- Wan 2.1: It excels in generating complex, physics-accurate scenes, making it a strong choice for action sequences and VFX previews. Directors can experiment with camera angles and motion choreography without expensive live shoots. Its open-source nature also allows for customization, which is particularly valuable for large-scale productions.

- Sora: With its Storyboard feature, Sora is an ideal tool for testing narrative flow. Directors can generate multiple interconnected scenes to explore different pacing and visual styles. Its high-resolution output (up to 1080p) makes it easier to visualize what the final product will look like. Reports have even suggested that OpenAI has ambitions to break into Hollywood, shaking up the film industry with Sora’s capabilities.

This short animation generated by Wan 2.1 showcases impressively smooth transitions, fluid character movements, and a consistently cohesive art style. Even subtle hints of an intriguing storyline emerge, demonstrating the model's strong grasp of spatial and temporal consistency. If integrated into real-world production, Wan 2.1 has the potential to captivate audiences. It’s fair to say that this technology could be a true game-changer, poised to reshape how films are made!

6.3 Marketing and Advertising: Creating Attention-Grabbing Visuals

In an age of information overload, brands need high-quality, visually compelling content to stand out. AI-generated videos are becoming a game-changer for marketers.

- Wan 2.1: It performs exceptionally well in product visualization and scene simulation. Brands can quickly generate dynamic product demonstrations in different environments without costly shoots. Its open-source framework also allows marketing teams to integrate it into existing workflows for more efficient content production.

- Sora: When it comes to creative storytelling and emotional engagement, Sora takes the lead. It can generate visually striking, emotionally resonant content—perfect for brand storytelling and high-impact advertising. Its high-resolution output meets the standards of luxury and high-end brands that require polished visuals.

This is a toy commercial created by Sora, featuring flawless voiceovers and music that bring a dreamy toy world to life. Every detail, from the vibrant colors to the smooth character animations, enhances the sense of wonder, making it feel like stepping into a child’s imagination. The seamless integration of AI-driven storytelling and visually captivating scenes makes the toys even more irresistible. I believe no child could watch this short film without being completely enchanted. Sora’s ability to merge fantasy with reality showcases how AI is revolutionizing advertising, crafting experiences that not only capture attention but also spark genuine excitement and desire. AI’s boundless imagination and creativity seem to be the perfect match for the playful and magical nature of toys!

6.4 Enterprise and Developer Applications: Integration and Customization

Beyond creative content, AI video generation has enormous potential for enterprise applications, from product design to customer service and training.

- Wan 2.1: Its open-source framework gives businesses full control over customization and integration. Companies can modify the model to fit specific needs, integrate it into internal systems, and even fine-tune it using private datasets. The availability of API access makes it a powerful tool for developers looking to build custom applications.

- Sora: Its cloud-based, user-friendly interface makes it an attractive choice for teams that lack technical expertise. Companies can deploy it quickly without worrying about hardware requirements. OpenAI’s ongoing updates and support also ensure stability for enterprise users.

Best Choice: For tech-driven companies that require deep integration and customization, Wan 2.1 is the better choice. But for teams that prioritize ease of use and quick deployment, Sora offers a more accessible solution. Large enterprises might find value in using both tools across different departments and projects.

7. Conclusion: Two Paths in AI Video Generation

Throughout this in-depth comparison of Wan 2.1 and Sora, we've explored two leading AI video generation models, each representing a fundamentally different approach to technology and business. Now, it’s time to summarize our findings and leave you with some final thoughts.

Two Models, Two Philosophies

Wan 2.1, Alibaba’s open-source innovation, showcases the immense potential of a collaborative ecosystem. With its multilingual support, diverse model variants, and strong physics simulation, it excels in various applications. Most importantly, its open-source nature gives developers and enterprises unparalleled flexibility and customization options—an invaluable asset in the fast-evolving AI landscape.

Sora, OpenAI’s closed-source product, represents the power of refined user experience. With its intuitive interface, rich editing tools, and strong creative capabilities, it has become a powerful assistant for content creators. While it operates on a subscription model, its cloud-based deployment and continuous updates ensure convenience and reliability for users.

Rather than determining which is better, this comparison highlights two distinct technological and business philosophies. Wan 2.1 prioritizes openness and flexibility, while Sora focuses on accessibility and innovation. Both have their strengths and limitations, catering to different user needs.

Final Thoughts

Wan 2.1 and Sora reflect the current state of AI video generation, but they are merely snapshots of a rapidly evolving industry. In the coming years, we can expect even more powerful, user-friendly, and cost-effective tools to emerge—lowering barriers to video creation and unlocking new creative possibilities.

Regardless of how the technology advances, human creativity remains at the core. AI video generation tools—whether Wan 2.1 or Sora—are designed to empower creators, not replace them. Their true value lies in how they help us tell better stories, evoke deeper emotions, and craft richer visual experiences.

As we step into this new era where AI and human creativity intertwine, the most exciting part isn't the technology itself, but how it will reshape the way we express and share ideas. Whether you choose the open-source flexibility of Wan 2.1 or the polished experience of Sora, the key is to start exploring, start creating, and become part of this visual revolution.